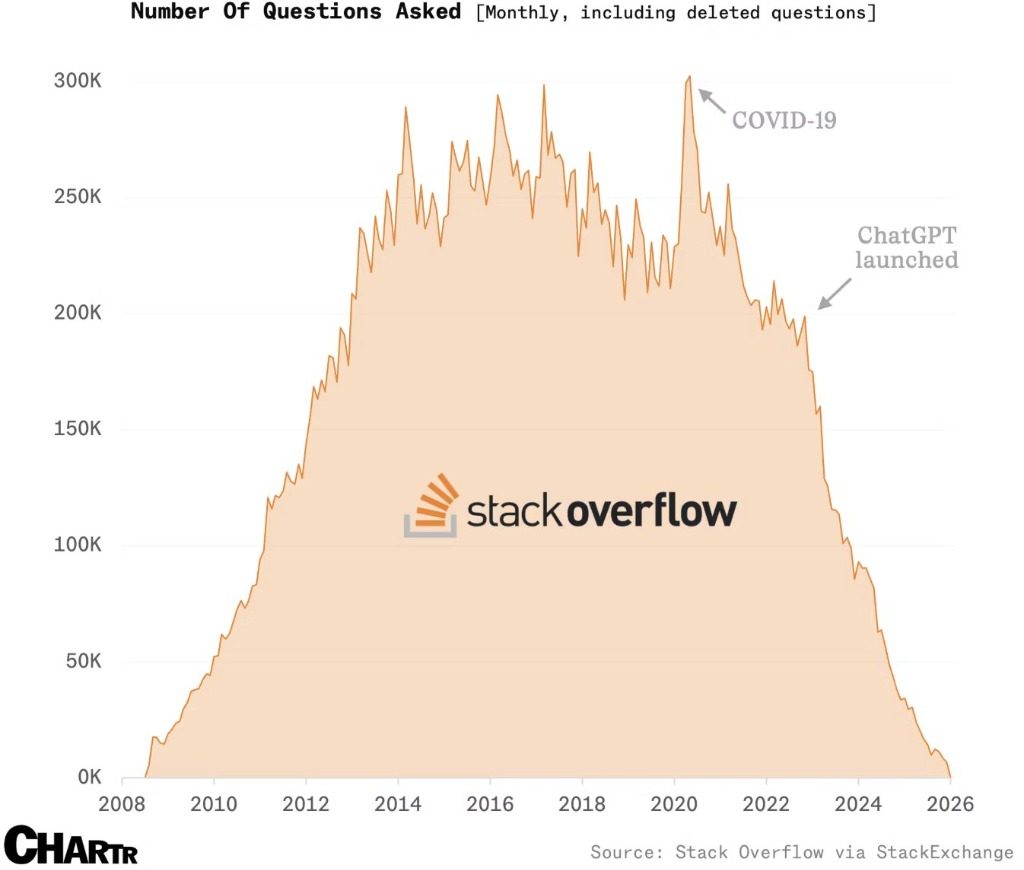

Look at this chart. Really look at it.

From 2008 to 2020, Stack Overflow was the place where developers shared knowledge. 300,000 questions a month at its peak. Every error message, every obscure bug, every "how do I..." question — answered by real humans who had solved real problems.

Then ChatGPT launched in late 2022.

And that mountain became a cliff.

The Numbers Don't Lie

The data is brutal:

- Peak (2017-2020): ~250,000-300,000 questions/month

- Post-ChatGPT (2024): ~50,000 questions/month

- Now (2026): Nearly flatlined

That's not a gradual decline. That's a collapse: a drop of roughly 80-83% from the peak by 2024.

The Delicious Irony

Here's what makes this truly fascinating (and terrifying):

- Stack Overflow's 50+ million questions and answers were part of the training data for GPT, Claude, and virtually every other major LLM

- Those LLMs became so good that developers stopped going to Stack Overflow

- Stack Overflow is now dying, meaning no new high-quality Q&A data is being generated

- Future LLMs will have no fresh source of this type of data

It's like mold growing on bread. The mold consumes the bread, thrives for a while, then starves when there's nothing left to eat.

Stack Overflow trained its replacement. Its replacement killed it. Now the replacement has no food source.

This is the AI Ouroboros — the snake eating its own tail.

Why This Is a Catastrophe (Not Just for Stack Overflow)

"Who cares? We have ChatGPT now."

I hear you. But think deeper.

Problem 1: Stale Knowledge

LLMs are trained on data up to a cutoff date. Stack Overflow was a living, breathing knowledge base that updated daily. New frameworks, new bugs, new solutions — all documented in real-time by working developers.

That pipeline is broken now.

When a new JavaScript framework drops in 2027 with a weird edge-case bug, where does that solution get documented? Where does the next LLM learn about it?

Problem 2: The Quality Gap

Stack Overflow had:

- Community voting — good answers rose, bad ones sank

- Comments and corrections — answers were refined over time

- Reputation systems — incentivized quality contributions

- Moderation — kept spam and nonsense out

LLM-generated answers have none of this. They sound confident but can be subtly wrong. And there's no community to correct them.

Problem 3: The Expertise Drain

Who answered Stack Overflow questions? Senior developers. People who had solved these problems in production. People with battle scars and hard-won knowledge.

Those people aren't writing detailed technical answers anymore. Why would they? It takes 30 minutes to write a great Stack Overflow answer. For free. When an AI can generate a "good enough" response in 3 seconds.

The incentive structure for knowledge sharing is broken.

Problem 4: The Synthetic Data Death Spiral

Here's where it gets really dark.

As Stack Overflow dies, AI companies need new data sources. So what do they do? They start training on AI-generated content.

AI trained on AI.

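Research has shown this can lead to "model collapse" (for example, Shumailov et al., "AI models collapse when trained on recursively generated data," Nature, 2024): each generation gets slightly worse, slightly more generic, slightly more disconnected from reality.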

We're already seeing this. The internet is filling up with AI-generated slop. Medium articles that say nothing. Blog posts that are just regurgitated LLM output. "Content" that exists only to exist.

The signal-to-noise ratio is collapsing.
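A deliberately crude toy can show the tail-loss mechanism behind model collapse. This is a sketch under heavy assumptions and is not how real LLMs are trained. The hypothetical "model" can only reproduce items it saw during training. Each generation is trained solely on the previous generation's output. The names `next_generation` and `simulate` are invented for illustration.

```python
import random


def next_generation(corpus: list[str], size: int, rng: random.Random) -> list[str]:
    """Hypothetical toy 'model': it can only emit items it was trained on,
    so it produces its output by resampling the training corpus."""
    return [rng.choice(corpus) for _ in range(size)]


def simulate(generations: int = 20, size: int = 1000, seed: int = 0) -> list[int]:
    """Train each generation only on the previous generation's output and
    record how many distinct answers survive at each step."""
    rng = random.Random(seed)
    # Generation 0 stands in for a human-written corpus of distinct answers.
    corpus = [f"answer-{i}" for i in range(size)]
    distinct_counts = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, size, rng)
        # No new answers can enter the loop, so this count never increases.
        distinct_counts.append(len(set(corpus)))
    return distinct_counts


if __name__ == "__main__":
    for gen, n in enumerate(simulate()):
        print(f"generation {gen:2d}: {n} distinct answers")
```

Each generation draws only from the one before it, so the number of distinct answers can never go up. Any rare answer that happens not to be sampled is gone for good. Real models interpolate and recombine rather than copy verbatim, so this toy overstates how fast the effect happens. It only illustrates how items in the tail vanish when nothing new enters the loop.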

"But AI Will Solve This!"

Will it though?

Think about what made Stack Overflow valuable:

- Real humans with real problems asking questions

- Real humans with real experience answering them

- Real community validating and improving the answers

- Real-time updates as technology evolved

You can't replicate this with AI. AI answers based on what it's seen before. It doesn't discover new solutions. It doesn't debug novel edge cases. It doesn't invent workarounds for undocumented behavior.

AI is a compression of past human knowledge. It is not a generator of new human knowledge.

The Uncomfortable Truth

We are living through an extinction event for structured technical knowledge sharing.

And almost nobody is talking about it.

The developer community spent 15 years building Stack Overflow into the greatest programming knowledge base in history. Now that knowledge has been extracted, monetized, and the community that created it is dispersing.

We ate the seed corn.

What Happens Next?

I don't have good answers here. But here are some possibilities:

Scenario 1: The Dark Ages

No new knowledge-sharing platforms emerge. Developers become entirely dependent on increasingly stale LLMs. Technical knowledge fragments. Tribal knowledge returns. We effectively lose a decade of progress.

Scenario 2: New Platforms Emerge

Someone builds the "post-AI Stack Overflow" — a platform that somehow incentivizes human knowledge sharing despite LLMs existing. Maybe crypto rewards? Maybe something novel? This is hard because the fundamental incentive problem remains unsolved.

Scenario 3: Corporations Take Over

Companies like Google and Microsoft create "official" documentation that becomes the primary source of truth. AI gets trained on that instead. But this centralizes knowledge in ways that feel dystopian.

Scenario 4: AI Gets Good Enough (Hopefully)

Maybe AI becomes capable of truly novel problem-solving, not just pattern matching. Maybe it can replace human experts entirely. But this is optimistic, and we're not there yet.

My Take

Here's what I think is actually happening:

We're in a transition period where:

- Old knowledge-sharing platforms are dying (Stack Overflow, forums, blogs)

- New platforms haven't emerged yet (what replaces them is unclear)

- LLMs are coasting on past data (they're still useful, but for how long?)

- Nobody is thinking long-term (quarterly earnings > knowledge infrastructure)

The next 5-10 years will be messy. Developers will increasingly feel the limitations of LLMs when working on cutting-edge problems. We'll miss the depth and nuance of Stack Overflow answers.

And maybe — just maybe — some new form of knowledge sharing will emerge from the chaos.

What You Can Do

- Write more: blog posts, documentation, tutorials. Create original content that isn't AI-generated.

- Still use Stack Overflow: even if you use AI, share your solutions back with the community.

- Support open knowledge: contribute to open-source projects, documentation, and community resources.

- Be skeptical of AI answers: they're often wrong in subtle ways. Verify. Test. Think critically.

- Mentor humans: share your knowledge directly with other developers. Don't let expertise die in your head.

The Paradox Summarized

Stack Overflow built the data that trained the AI that killed Stack Overflow.

Now the AI has no new data source.

The snake has eaten its tail.

What comes next?

Nobody knows. But we should probably start thinking about it.

What's your take on the Stack Overflow decline? Are you still using it? What platforms have replaced it for you? Drop a comment — I'm genuinely curious how other developers are handling this shift.